This article was 100% written by a human being (me). And you may start to see more content authenticity statements like this in the near future, thanks to new AI disclosure regulations. Keep reading to find out how these requirements could impact your agency.

80% of brands are worried about how their creative and media agency partners are using GenAI. And I think their concerns are valid.

Left unaddressed, these seeds of doubt can easily turn into distrust and fracture client-agency relationships.

Ethical considerations aside, there are compelling legal reasons to disclose your agency’s use of AI. The biggest one is copyright protection.

We’ve seen several cases in the US where the courts have ruled that only human-created work can be protected by copyright (more on that below).

Agencies are stuck between a rock and a hard place: clients now expect faster turnaround times, thanks to AI. But where’s the line with AI use? And how can you protect yourself and your clients from copyright issues?

I went digging to find out:

- How agencies are preparing for changes to AI disclosure and copyright protection requirements

- How the EU AI Act and similar laws will impact the future of agencies

- The biggest perils and opportunities for agencies in an AI world

Stick around for my seven predictions on how the EU AI Act will change how agencies work. Plus, advice from boots-on-the-ground agency owners on both sides of the AI fence.

Here’s your line-up:

- Ashley Gross, AI Consultant featured in Forbes and MSN

- Guido Ampollini, CEO and Founder of GA Agency

- Iryna Melnyk, Marketing Consultant at Jose Angelo Studios

- Justin Belmont, CEO of Prose

- Justin Mauldin, Founder and CEO at Salient PR

- Natalie Cisneros, Creative Managing Partner at Jekyll Works

- Steve Mudd, Founder and CEO of Talentless AI

But first, a story.

How a monkey selfie set the scene for AI copyright

Photographer David Slater was snapping pictures in Tangkoko reserve in Indonesia when a macaque monkey called Naruto picked up the camera and took a selfie.

The selfie that launched a thousand copyright questions.

When Slater tried to copyright the photograph, animal rights group PETA sued him on Naruto’s behalf. PETA argued that the monkey should own the copyright to the photo since Naruto took it.

The judge ruled that only human work can be protected under copyright. That meant neither Slater nor Naruto could claim the copyright.

This case set the scene for AI-generated work.

If your content is created by a machine (i.e. not a person), it’s currently not eligible for copyright. Importantly, if you use AI as part of the process but a human creates the final output, it can be protected by copyright.

Co-founder of Trust Insights, Christopher Penn, highlights why this distinction is so important:

AI has muddied the waters – your agency needs to protect itself and your clients

New AI disclosure requirements in Europe have already started drawing lines in the sand. For agencies using AI, I think this is a huge wake-up call. If you don’t disclose where and how you use it, your clients could try to falsely claim copyright on AI content.

Or the opposite could happen.

Let’s say I use AI-generated images in this article. Since they’re machine-generated, anybody can take and use them without my permission. That’s just the way it is.

But this could lead to a bigger issue. People might assume that I used AI to write the post, too. Next thing I know, people are lifting the full article even though the text is my work (and therefore copyright protected).

It’s bad enough for brands, but it’s a seriously uncomfortable conversation to have with your clients.

When you disclose what is AI-generated, you actually protect human-created work from being ripped off.

As AI consultant Ashley Gross says, “It’s simple: people deserve to know when AI is behind decisions or creating content.” And I couldn’t agree more.

P.S. I asked ChatGPT to create an image illustrating the dangers of agencies not disclosing AI use to clients. Here’s the output – feel free to take this image if you enjoy typos. The rest is copyrighted.

AI-generated image showing … not much.

The EU AI Act is a sign of what’s to come for agencies

Calls to regulate AI use are getting louder as ethical lines are being crossed. The EU AI Act is a good example of where we’re headed. Despite only affecting the European market, it has become the blueprint for the rest of the world.

So what is it? And more importantly, how does it affect your agency?

EU member states endorsed the Act on February 2nd, 2024, and it entered into force on August 1st, 2024. According to the European Parliament, generative AI must meet the following transparency requirements:

- Disclosing that the content was generated by AI

- Designing the model to prevent it from generating illegal content

- Publishing summaries of copyrighted data used for training

For agencies, there are two major implications:

- Transparent disclosure – You need to directly notify users when you’re using AI

- Content authentication – You must include clear marking to identify synthetic content, such as meta tags, watermarks, or content labels

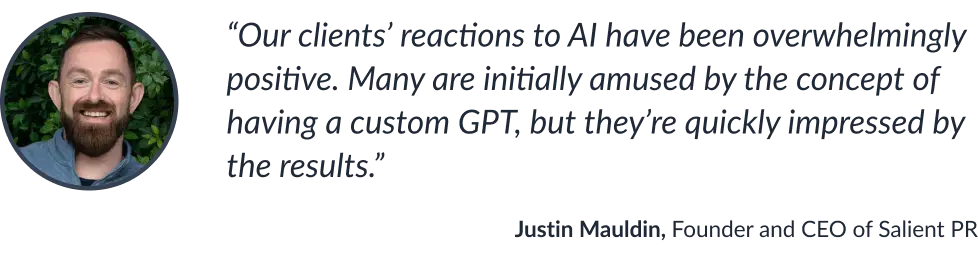

Meta has already introduced AI content labeling based on feedback from the Oversight Board.

Source: Meta

Conformity assessments will begin in August 2025, meaning the clock is ticking for your agency to make sure you’re complying. If not, you risk fines and reputational damage.

If your agency operates outside of Europe, you may think this doesn’t apply to you. But you could be wrong.

Being transparent and disclosing AI use now prepares you in case a similar law is passed in your market. Otherwise, your agency could face a huge backlog of work to review once similar requirements become law.

And don’t forget: the EU AI Act applies to any services used by EU citizens, even if your agency is based elsewhere.

Now for my predictions. Here’s how I think the EU AI Act could change the way agencies work.

Prediction one – Agencies will have a dedicated AI consultant

Regulation is one of the biggest conversations surrounding AI at the moment. While some are crying out for proper regulations, others think it’s an impossible task and resistance is futile. Even with the introduction of laws like the EU AI Act, it may be too late to implement any real form of moderation.

But that doesn’t mean you should rest on your laurels. There are still plenty of ways to promote responsible AI use that won’t land you in hot water with your clients.

For marketing consultant Iryna Melnyk, it’s all about building transparent and authentic communication.

Agencies will feel the pressure to over-communicate how they’re staying on top of new regulations and how they’re using AI.

I think this will become a full-time role down the line. We’re seeing new positions opening up within organizations to manage AI, and agencies may follow suit. That could be an internal or external AI consultant whose role is to make sure your teams are following best practices and regulations.

Prediction two – Content authenticity badges will become the norm, but people won’t care

We’ve already seen Meta’s updated content labels. And I think this will kick into overdrive once the initial provisions of the EU AI Act come into full force.

We’ll see more agencies use “No AI” statements and content authenticity badges to strengthen trust with their clients. And while I think this small gesture could be a quick win in times of widespread misinformation, I don’t think it will matter in the long term.

Source: No AI icon

The stigma around AI content is disintegrating quickly. Sooner or later, the questions about whether AI-generated content is “good” or “bad” will dry up. Clients will want to know if AI is used for compliance and copyright reasons, but there will be less pushback around quality.

All eight agency owners I spoke to agreed that clients have mostly reacted positively to AI.

Justin Mauldin says his agency has embraced AI as part of its daily operations and has even built custom GPT models for clients.

He’s seen an overwhelmingly positive response from clients. His team has even shared AI-generated content without immediately disclosing its origins just to gauge clients’ blind feedback. Justin says they are always amazed.

Then we have Steve Mudd. His next-gen agency is currently working on a project that will rely almost exclusively on synthetic media. Here’s how he plans to approach the disclosure process:

- Publish a GenAI media disclosure statement on the website

- Add a disclaimer in the description of videos (and potentially onscreen)

- Disclose the use of synthetic voices

But Steve makes a valid point. Almost everything we see is already doctored in some way without us knowing, so he thinks these disclosure requirements won’t stand the test of time. I think he’s onto something.

My two cents? Content authenticity badges will become another box to check in the creative review process to keep your work compliant. But clients will soon lose interest as long as your AI use is transparent and above board.

Prediction three – AI content labels could end up misleading us even more

The EU AI Act means content labeling is inevitable. But these labels are already getting confusing.

Let’s take Meta as an example.

It updated its labeling criteria three times between May and September 2024 to meet European standards. The problem? It still isn’t clear when labels are required and what they actually mean. Given that Meta will penalize users who don’t correctly label AI content, this is a big deal.

Source: Meta

Meta’s original “Made with AI” label was problematic because content made by humans (with minor tweaks by AI tools) was incorrectly categorized as AI-generated. That led to the “AI info” label which people can click for more information about the content.

But this changed again.

When Meta detects content has only been modified or edited by AI, the “AI info” label only appears in the post menu. It also uses “Imagined with AI” labels for photorealistic images made using its AI feature and wants to do this for content created with other tools.

AI labeling is required in some cases, like when content is “photorealistic videos, realistic-sounding audio, or content resembling real-life scenarios.” In others, it’s encouraged but not mandatory.

Despite moves to standardize AI labeling, I see it becoming confusing and hard to regulate.

It could also be complicated if your agency uses AI to generate or adjust creative assets that fit into a larger context. For instance, let’s say you use AI for a single asset in a campaign. Would the whole campaign need an AI label?

Prediction four – Compliance will slow down review and approval

We’re flooded with conversations about how AI has impacted creativity. But there’s another big question agencies should be asking: What does it mean for compliance?

The short answer is longer content review cycles.

If the EU AI Act is a sign of what’s to come, AI compliance will be a major talking point for agencies in the next few years. And the learning curve starts now.

So, I asked Ashley Gross what agencies should be doing. She suggests starting with getting the right systems in place.

Once you’ve nailed this, Ashley recommends taking the following steps:

- Step one – Train staff on new laws like the EU AI Act and how their roles fit into compliance

- Step two – Include compliance clauses in contracts to reduce liability

- Step three – Use solid methods to clearly label AI-generated content, like watermarks, metadata tags, or cryptographic signatures
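To make step three a little more concrete, here’s a minimal sketch of what a signed metadata tag could look like. It’s an illustration only, not a standard or anything Ashley prescribed: the field names (`content_sha256`, `ai_generated`, `tool`), the `SECRET_KEY`, and both function names are my own assumptions. It also uses an HMAC with a shared secret as a simple stand-in for a cryptographic signature, so a production setup would more likely rely on public-key signing and proper key management.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret for illustration; load a real one from a secrets manager.
SECRET_KEY = b"replace-with-a-real-secret"


def build_disclosure(content: bytes, ai_generated: bool, tool: str) -> dict:
    """Return a disclosure record for one asset, signed with HMAC-SHA256."""
    record = {
        "content_sha256": hashlib.sha256(content).hexdigest(),  # ties the label to this exact asset
        "ai_generated": ai_generated,
        "tool": tool,
    }
    # Serialize with sorted keys so signing and verifying see identical bytes.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["signature"] = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return record


def verify_disclosure(content: bytes, record: dict) -> bool:
    """Check that the record matches the content and has not been altered."""
    unsigned = {k: v for k, v in record.items() if k != "signature"}
    if unsigned.get("content_sha256") != hashlib.sha256(content).hexdigest():
        return False  # the asset changed after it was labeled
    payload = json.dumps(unsigned, sort_keys=True).encode("utf-8")
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, str(record.get("signature", "")))


if __name__ == "__main__":
    asset = b"Campaign headline drafted with a generative model"
    label = build_disclosure(asset, ai_generated=True, tool="example-model")
    print(json.dumps(label, indent=2))
    print(verify_disclosure(asset, label))
```

The idea is that the label travels with the asset. If someone edits the content or flips the `ai_generated` flag after the fact, verification fails, which gives your review process something objective to check.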

Guido Ampollini seconds this. He urges agencies to start training client-facing teams on how to clearly communicate these changes. He also encourages agencies to conduct internal AI audits.

And if you think this is just a compliance exercise, think again. As Ashley reminds us, this is about building and maintaining healthy client relationships.

“Keeping your documentation solid, user notifications clear, and risks managed upfront isn’t just about compliance—it’s about earning trust.”

Guido agrees. He argues that the EU AI Act will strengthen client-agency relationships by making transparency a top priority.

Prediction five – AI could become the new “photoshopping” or a misperceived silver bullet

Natalie Cisneros recently reminded me of how “photoshopping” became widely perceived in a negative light.

When you think of “photoshopping”, you probably think of dodgy edits and fake influencer images. Natalie argues this perception comes from a misunderstanding of how the majority actually use the tool. And this misunderstanding forced many people to mask their use of Photoshop to some degree.

AI could face a similar fate, becoming socially synonymous with inauthenticity. I think the best way to combat this is more transparency and better education. As an agency, educating clients about AI will become a big chunk of your work.

The pendulum could swing the other way, too.

There’s a widespread perception that AI is a silver bullet. This can give your clients unrealistic expectations and lead to time-wasting or delays. I predict that creative directors will find it tricky to balance “selling” clients on AI without promising them the world.

Prediction six – Agencies will need to put their AI stake in the ground

If you spend any time on LinkedIn, or the Internet in general, you’ve probably noticed there are two AI camps: the evangelists and the haters.

Agencies are reaching the point where they need to put a stake in the ground and pick a side. This could well become a huge part of your differentiation going forward.

Either way, Justin Belmont argues that the EU AI Act is forcing agencies to get serious about their stance.

Justin’s team has implemented a “human-first” AI policy, meaning all content goes through a thorough human vetting process.

Steve Mudd is doing the opposite with his “AI-first” agency. Despite the perils, he thinks the possibilities are endless for marketers, brands, and agencies.

Here are some of his favorite “wholesome” examples of synthetic media:

- Rapper The D.O.C. lost his voice in a tragic accident and is using synthetic media to create a new album

- Randy Travis had a stroke that left him unable to sing, so he’s using synthetic media to create new music

- NBC created an AI-generated Al Michaels to share news during the Paris Olympics

Al Michaels discusses AI using his voice for the Paris Olympics

Steve’s argument is more nuanced than pegging AI as “good” or “bad” since it can be used for both. He gives the example of a collective of Venezuelan journalists using synthetic avatars to protect themselves after government crackdowns on independent media. But the government is allegedly using the same technology to spread propaganda.

Ultimately, it will all come down to media literacy. We (all humans, not just agencies) need to develop the skills to distinguish fake AI news from reality. And fast.

Prediction seven – Agencies need to put their money where their ethics are

Your agency has to cover its back by staying compliant with laws like the EU AI Act. But I think we’ll see a more potent side effect that goes way beyond compliance. Your stance on AI could become a big part of your brand values and message.

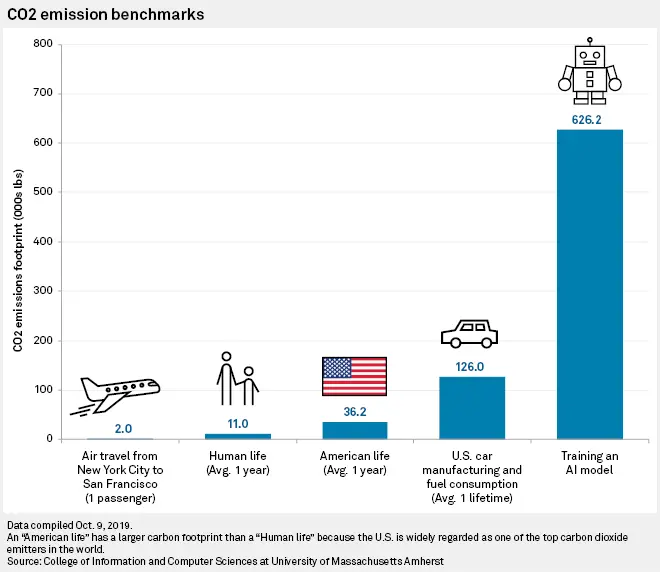

For instance, your agency will have a hard time positioning itself in the sustainability sector if you’re relying heavily on AI (which has a catastrophic carbon footprint).

Source: SP Global

And the same goes with the AI companies you choose to support. These buying decisions will be about much more than just picking software. They will become an ethical stance.

We’re standing on the precipice with AI. For every person who argues it will lower barriers of entry and democratize creative professions, another points out the inherent bias it already exhibits.

Associate Creative Director Clovis Siemens recently shared his concerns with me.

Let’s say you support an AI provider associated with deepfakes or other questionable practices. That could impact your agency and put certain clients off working with you.

Just look at how rampant deepfakes have already become.

In 2023, an AI-generated deepfake audio clip impersonating London Mayor Sadiq Khan inflamed riots and protests across the UK.

This incident raised concerns about the dangers of synthetic media. In a Zeteo documentary, UC Berkeley professor Hany Farid warned that with reduced barriers to entry comes an increased threat vector.

“We talked about democratizing access to information. We are also democratizing access to disinformation.”

Farid also points out a significant flaw in the technology sector, claiming they “tend to privatize the profits and socialize the costs.”

He is one of many people calling for proper guardrails for AI companies. Whether that happens or not is a different story.

AI companies are being called out for not doing enough to prevent unethical use of their products. So, I predict agencies will need to do deep research when choosing AI providers to make sure they align with their values (and their clients’ values).

My take? Where your agency spends its AI budget will say a lot about your ethics.

Agencies: Are you ready for what’s to come?

I think this article asks more questions than it answers. And I have more: What happens when the majority of the content online is AI-generated? Would disclosure laws even matter? Will progress grind to a halt if AI doesn’t have human work to feed off?

But that’s where we’re at with AI. As Steve Mudd says, there’s no hard and fast rule for how to disclose this information. There isn’t a battle-tested playbook yet. We’re all trying to learn fast.

These are my predictions for agencies, based on my conversations with people on the frontlines. But who knows what the future holds?

One thing is for sure, though. New regulations like the EU AI Act will force the “head-buried-in-the-sand” and the “wait-and-watch” camps to start paying attention.

*Disclaimer: I am not a lawyer, and this isn’t legal advice. Please always check new law requirements with your legal team.